For as long as computers have existed, physicists have used them as tools to understand, predict and model the natural world. Computing experts, for their part, have used advances in physics to develop machines that are faster, smarter and more ubiquitous than ever. This collection celebrates the latest phase in this symbiotic relationship, as the rise of artificial intelligence and quantum computing opens up new possibilities in basic and applied research.

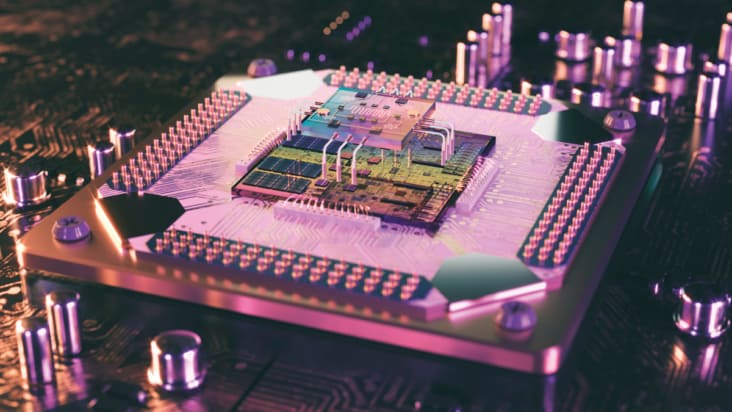

As quantum computing matures, will decades of engineering give silicon qubits an edge? Fernando Gonzalez-Zalba, Tsung-Yeh Yang and Alessandro Rossi think so

Physicist and Raspberry Pi inventor Eben Upton explains how simple computers are becoming integral to the Internet of Things

Physics World journalists discuss the week’s highlights

James McKenzie explains how Tim Berners-Lee’s invention of the World Wide Web at CERN has revolutionized how we trade

Tim Berners-Lee predicts the future of online publishing in an article he wrote for Physics World in 1992

Jess Wade illustrates the history of the World Wide Web, from the technology that enabled it to the staple it is today

Emerging technologies shaping our connected world

Fifth episode in mini-series revisits the birth of the Web and the challenges it now faces

Computing is transforming scientific research, but are researchers and software code adapting at the same rate? Benjamin Skuse finds out

Why North America has a ‘tornado alley’ and South America doesn’t

There’s a scientific reason why *Twisters* is set in the US Great Plains rather than Argentina, and it has to do with the Gulf of Mexico

Classical models of gravitational field show flaws close to the Earth

New gravitational field model quantifies the "divergence problem" identified in 2022

Simple equation predicts how quickly animals flap their wings

Relationship between mass, wing area and wingbeat frequency holds true for insects, bats, birds, whales and even a flapping robot

Nuclear physicists tame radius calculation problem

New "wavefunction matching" method correctly predicts nuclear radii of elements with atomic numbers from 2 to 58

Micro-tornadoes help transport nutrients within egg cells

New work sheds light on vortex flows involved in mixing and transporting ooplasmic components that cells need to develop

Frugal approach to computer modelling can reduce carbon emissions

Simulations should be designed to minimize energy consumption, say physicists

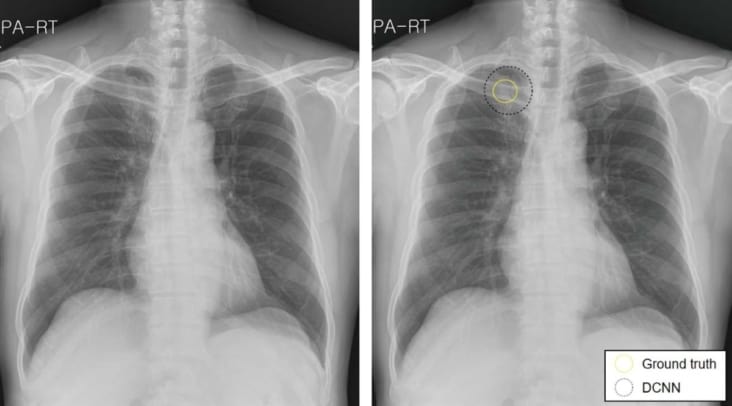

Introducing artificial intelligence into the clinical workflow helps radiologists detect lung cancer lesions on chest X-rays and dismiss false positives

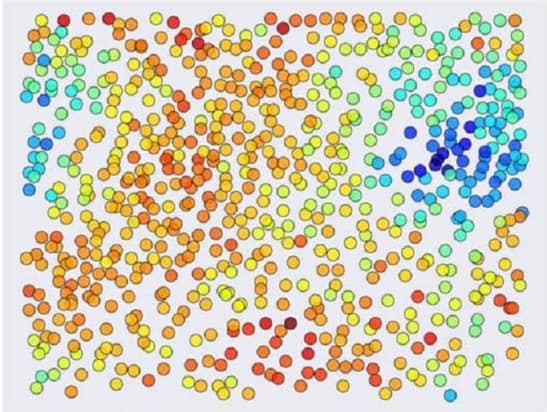

Algorithms help materials scientists recognize patterns in structure-function relationships

A deep learning algorithm detects brain haemorrhages on head CT scans with performance comparable to that of highly trained radiologists

An artificial intelligence model can identify patients with intermittent atrial fibrillation from scans performed during normal heart rhythm

Proof-of-concept demonstration performed with two superconducting qubits

An image-based artificial intelligence framework predicts a personalized radiation dose that minimizes the risk of treatment failure

A machine learning algorithm can read electroencephalograms as well as clinicians

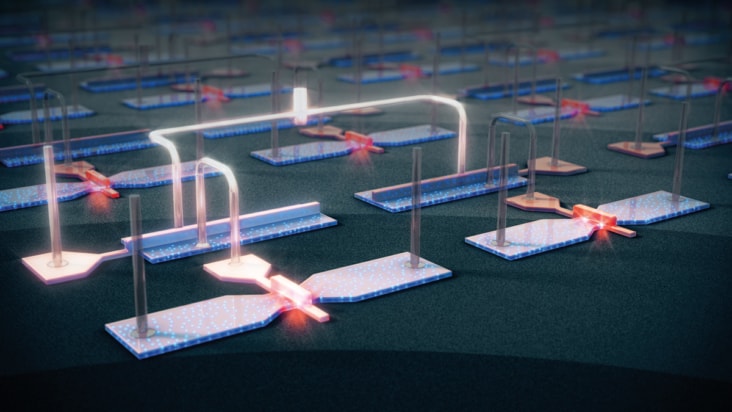

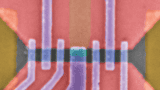

Spins hop between quantum dots in new quantum processor

Hopping-based logic achieved at high fidelity

‘Poor man’s Majoranas’ offer testbed for studying possible qubits

A new approach could put Majorana particles on track to become a novel qubit platform, but some scientists doubt the results’ validity

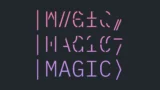

Quantum error correction produces better ‘magic’ states

Proof-of-concept demonstration yields encoded magic states that are robust against any single-qubit error

Schrödinger’s cat makes a better qubit in critical regime

Researchers discover that operating close to a phase transition produces optimal error suppression in so-called cat qubits

New ion trapping approach could help quantum computers scale up

Confining ions with static magnetic and electric fields instead of an oscillating radiofrequency field reduces heating and gives better position control

Controllable Cooper pair splitter could separate entangled electrons on demand

Proposed device might aid the development of quantum computers
